Murphy's Laws of Combat state that "The most dangerous thing in the world is a Second Lieutenant with a map and a compass." Similarly, in the world of soft science, few things are to be dreaded more than a writer with an agenda and colourful bullshitting skills. The tricky thing about bullshit is that it's difficult to distinguish from reasonable arguments - both may use some combination of words, graphs and numbers. There's nothing inherently bullshitty about those things, but like a map and a compass, you can do all kinds of stupid things with them.

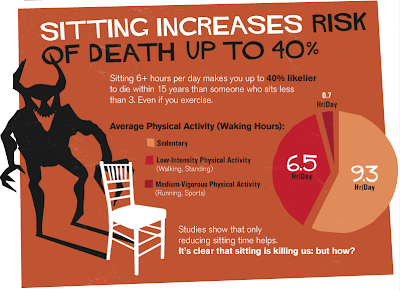

I recently stumbled upon a fascinating little "infographic" about what seems to be the biggest menace to the western world since Hitler: sitting down! A certain degree of physical activity is healthy - that seems to be quite an agreeable theory. But the graphic conveys a warning to us that is way more radical:

Wow! Now that's a scary message, especially since you're probably sitting on your chair or couch right now. So what is that figurative monster that lurks behind you while you are peacefully staring at your monitor? Let's try and follow the arguments that are presented in the graphic. So, how and why does sitting kill us?

According to the graphic, sitting increases the risk of death. Risk of death is apparently defined as the likelihood of dying within 15 years. So, if you sit six hours or more per day, you are 40% more likely to die in the next 15 years than someone who sits less than three hours.

So what's the bullshit here? Well, first of all, the graphic does not tell us who is compared with whom. The source doesn't tell us either; it only indicates that the info is based on "modern Americans".

But the serious bullshit lies in inferring causality from correlation. If we take a thousand people who sit a lot and compare them to a thousand people who don't like sitting, we might get a result similar to the one that is implied in the graphic: the sitters are more likely to die in the next fifteen years. So mortality rate and sitting habits correlate - there is a statistical connection between them. But that does not necessarily mean that sitting causes death, because correlation does not imply causality - as so often, there is a good Wikipedia article on that topic. There might be a common cause of both death and sitting that explains at least some of the statistical connection. People who are chronically sick are both more likely to die and more likely to be physically unable to stand or walk around a lot. Older people in general - even those who are relatively healthy - might be more prone to sit, as they may have less need to be physically active because they have retired from work or because they do not have children to keep them on their feet. Of course, older people are statistically more likely to die within the next fifteen years than the average. Conversely, toddlers and children are (thanks to modern medicine) very unlikely to die within fifteen years. Most of them also tend to be more physically active than adults. The graphic does not tell us that there may be common causes for sitting a lot and for a higher "death risk" - factors like age or health status. The causal connection between sitting and dying - meaning that the former causes the latter - is probably far weaker than the graphic suggests; most of the 40% - if that's even a reasonable number - is probably explained by a common cause and merely represents a correlation.
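To make the common-cause argument concrete, here is a minimal simulation sketch. Every number in it is made up for illustration (the share of "old" people, the sitting probabilities, the mortality rates), and the names `simulate`, `death_rate` and `relative_risk` are hypothetical helpers, not anything taken from the graphic or its sources. In this toy world sitting has no effect on dying at all; age alone drives both.

```python
import random


def simulate(n=200_000, seed=0):
    """Build a toy population in which age drives both sitting and dying.

    All probabilities are invented for illustration only.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        old = rng.random() < 0.3                       # assumed: 30% are "old"
        sits_a_lot = rng.random() < (0.7 if old else 0.3)
        # Mortality depends on age only; sitting is deliberately ignored.
        dies = rng.random() < (0.40 if old else 0.05)
        rows.append((old, sits_a_lot, dies))
    return rows


def death_rate(rows, sits_a_lot, old=None):
    """Share of people who die, optionally restricted to one age group."""
    group = [r for r in rows
             if r[1] == sits_a_lot and (old is None or r[0] == old)]
    return sum(r[2] for r in group) / len(group)


def relative_risk(rows, old=None):
    """Death rate of heavy sitters divided by that of light sitters."""
    return death_rate(rows, True, old) / death_rate(rows, False, old)


rows = simulate()
print("everyone pooled:", round(relative_risk(rows), 2))
print("old people only:", round(relative_risk(rows, old=True), 2))
print("young people only:", round(relative_risk(rows, old=False), 2))
```

With these invented probabilities, the pooled ratio works out to roughly 2.2 in expectation, while the ratio inside each age group hovers around 1. The sitters "die more often" only because more of them are old.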

"Obese people sit for 2,5 more hours per day than thin people". That leaves us clueless: is sitting making people obese, or do obese people like to sit more than thinner people? Thankfully, the graphic spells it out: "Sitting makes us fat". It tells us that in twenty years, obesity doubled. Sitting time increased for 8%, while excercise rates stayed the same. This is probably meant to imply that a slight increase in sitting time causes a significant increase in obesity. But that's bullshit, again: Exercise rates and sitting time are not the only factors for obesity. Whether people with a realtively healthy metabolism gain or lose weight depends on how many energy they acquire by eating, and how many energy they spend. Both assumed causes of obesity are on the spending end; the graphic tells us nothing about how eating habits changed between 1980 and 2000. But without additional information about both eating habits and energy-spending habits, our knowledge base is too small to say anything of significance about the causes of obesity. I could as well tell you that, between 1980 and 2000, excercise rates stayed the same, while the number of nations that have won the fifa world cup increased by 17%, from 6 to 7. Obesity doubled. So obesity doubles whenever there is a first-time fifa world cup winner? Of course not - my litte example is obviously a correlation, and without additional data, there is no reason to consider tha graphic's information to be anything more than that.

Now let's take a closer look at the graph at the bottom:

If we compare the length of the bars for "standing" and "walking" by counting the number of columns that they fill, we learn that the "% energy increase above sitting" of walking is 15 times higher than the energy increase of standing. That does not mean that walking burns fifteen times as many calories as standing does. It only means that standing burns 10% more calories than sitting, while walking burns 150% more calories than sitting. If sitting burns 1 calorie per minute (as the graphic states further below), standing burns 1.1 calories per minute, while walking burns 2.5 calories per minute. Walking therefore burns roughly 2.3 times as many calories as standing, not 15 times. That's not that much of a difference - but what we see is a very small bar next to "standing", and a comparatively huge bar next to "walking". That's bullshit at its best - there is no lying involved, and yet the reader is screwed over if he or she does not pay attention. If our bullshit-o-meter is not well-calibrated, we might fall for the graph and think that walking burns 15 times as many calories as standing does.
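The arithmetic behind the bar chart can be spelled out in a few lines. This is only a sketch: the 1 calorie per minute for sitting and the 10% and 150% increases are the figures quoted from the graphic, while the helper name `kcal_per_min` is my own.

```python
SITTING_KCAL_PER_MIN = 1.0  # figure quoted from the graphic


def kcal_per_min(percent_increase_above_sitting):
    """Convert a '% energy increase above sitting' into calories per minute."""
    return SITTING_KCAL_PER_MIN * (1 + percent_increase_above_sitting / 100)


standing = kcal_per_min(10)    # 10% above sitting
walking = kcal_per_min(150)    # 150% above sitting

bar_ratio = 150 / 10                 # what the bar lengths suggest
actual_ratio = walking / standing    # what is actually burned

print(f"standing: {standing:.1f} per minute, walking: {walking:.1f} per minute")
print(f"bars differ by a factor of {bar_ratio:.0f}, "
      f"calories burned by a factor of {actual_ratio:.2f}")
```

The bars compare the increases over sitting, not the totals, which is why a factor of 15 on paper shrinks to a factor of about 2.3 in calories burned.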

The artwork involved in the "infographic" is well done. Just look at that nice dark dragon:

Even without reading the graphs and text, those shadowy demons and dragons make it clear to anyone: sitting is bad! Sitting is bad! While technically not bullshit, involving symbols of fear and death in the background of an "infographic" is at least quite manipulative, although I'd let them off the hook on that one, for the artistic effort. But there's one last piece of bullshit involved in the last "page": the good old historical argument:

"A hundred years ago, when we were all out toiling in the fields and factories, obesity was basically nonexistent". That may be true, but if you want to argue that sitting kills, while not sitting ("toiling in the fields" for example) is safe, you should keep your hands away from arguments like this. In 1900, life expectancy in the USA was 46,3 for men, 48,3 for women. In 1998, whe have numbers of 73,8 for men and 79,5 for women (source). Differences of life expectancy often seem more drastic because one of their main cause is infant mortality - a U.S. adult in 1900 could probably expect to live to at least 50 or 60 years of age. Still, while people in 1900 were thinner, they were not necessarily healthier - field and factory work without automated machinery and advanced farming equipment is hardly a healthy field of occupation. So I wonder, what exactly was the point in talking about how people lived a hundred years ago? The graph tells us that we have to "help our bodies in other ways" since "we can't exactly run free in the fields". But "the fields" were a place where most people used to plow and to pluck, not run around freely. The last page of the "infographic" confuses us with the notion that people used to be healthier when they worked in factories and fields, which is probably not a historical truth. With false assumptions like that, we cannot hope to gain anything but unproven conclusions - that's just bad soft science.

While the creators of the graphic certainly had good intentions, their argument contains correlations disguised as causality, confusing graphs, and false assumptions - or bullshit, if you will. But using our bullshit-o-meters, we can have a lot of fun with graphics like these, by turning their insults to our intelligence into a nice little training exercise for our soft science skills.

Monday, 30 May 2011

Wednesday, 25 May 2011

Hard Science vs. Soft Science (long version)

This is supposed to be a blog about 'soft science'. No, I don't want to bash 'soft scientists', at least not all of them. But bashing has something to do with this blog's purpose. The patterns of disdain between different fields of science are pretty complex; one exemplary way to bring order to the world of interdisciplinary academic bashing - sorting the sciences by "purity" - is illustrated by xkcd. A rather simple way to speak about the "worthiness" of different scientific fields is to divide them into "hard" and "soft" sciences. Although those exact terms are not always used, the idea of "hard" and "soft" sciences is widespread - and ultimately I want to argue that this is a bad thing. The 'soft sciences' are not as useless as they may seem, which I will try to demonstrate in this blog.

If you find the concept of "hard and soft science" to be even remotely interesting, you might already have checked the Wikipedia entry. If you haven't, that's not a problem, as I will quote it anyway:

"Hard science and soft science are colloquial terms often used when comparing fields of academic research or scholarship, with hard meaning perceived as being more scientific, rigorous, or accurate. Fields of the natural, physical, and computing sciences are often described as hard, while the social sciences and similar fields are often described as soft. The hard sciences are characterized as relying on experimental, empirical, quantifiable data, relying on the scientific method, and focusing on accuracy and objectivity." (Source: Wikipedia, 25.05.2011)

Apparently, what makes science "hard" is the scientific method: theories are tested and modified using empirical data and/or experiments. The availability of accurate empirical and experimental data depends heavily on the field of study. If a phenomenon can be repeatedly measured in a way that provides data that can be put in numbers, you have "accurate" empirical data. If you can repeatedly set a certain process in motion, having exactly the same starting position every time, you have an accurate experiment.

Those two things are quite feasible in physics, for example - you can build a machine that measures the boiling point of water a hundred or a thousand times in a row, thus obtaining "accurate" data about when the water will reach its boiling point. On the basis of that data, you can predict when the water will boil in your one thousand and first experiment - thus confirming your theory about the boiling point of water. If, however, your field of study is human society or culture, it is difficult to get quantifiable numbers, and even more difficult to set up experiments. The number of factors that you have to consider is much higher with people than with atoms, molecules, dirt or bacteria. In our little water-boiling-point experiment, we might overlook the influence of air pressure on the boiling point - but once we have figured that out, we can compare the boiling point of water at different altitudes and improve our theory.

To illustrate the difficulty in doing research on humans, I have come up with a purely fictional experiment. We would try to measure people's metaphorical "boiling point" - by testing how often you have to give a person electric shocks until they complain. We have a lot of factors that could be relevant. That particular "boiling point" could depend on age, culture, class, sex, gender, body weight, historical period, mood, you name it - not to speak of biographical factors that are different for every person. We could, for example, shock 10000 middle-aged single women from Denmark and get a result of an average of 3.7 20-volt shocks until they complain. A comparison group of 10000 middle-aged single women from France gets a result of an average of 5.3 20-volt shocks until they complain. While those numbers would certainly make for a fun little text in a tabloid, what theory could we construct based on those values? We could assert that French women are tougher - or we could argue that Danish women complain earlier. Even if we find a way to keep those two factors apart - for example by running experiments with the same people that make them complain, but without causing any pain - what information do we get? We may conclude that middle-aged single women from Denmark complain earlier than middle-aged single women from France, but how do we explain that? Is it more acceptable in "Danish" culture to complain? Do middle-aged people in Denmark generally complain more than middle-aged people in France? As we see, even with more or less "quantifiable" studies, we would need to do a LOT of experimenting to establish "facts" about even a very narrowly specified "type" of people. Also, any theory based on this fictional experiment would only apply to the time frame in which it was conducted. Who knows, maybe French mainstream culture will shift towards earlier expression of pain in the next decade. People are complicated, and so are culture and society.
And how do we define "French" and "Danish" anyway? How do we define "woman", "middle-aged", "single"? And how do we account for the unknown number of people from both countries who are not brave (or stupid) enough to participate in an experiment that involves getting shocked?

This was just one fictional experiment, but it's safe to assume that coming up with reliable ways to classify and measure people and coming up with stable environments for experiments with people is much, much more complicated than with less complex phenomena. Also, there are many things we cannot possibly measure at all - we cannot conduct experiments on people from different eras due to a lack of time travel technology, for example.

So, okay, experiments and empirical data are less accurate and more difficult to compare in social and cultural sciences than in biology, physics or chemistry. Point taken. "Hard sciences" operate with less complex data and can therefore generate and quantify empirical data more easily and more precisely. Of course, that does not mean that social and cultural sciences are more complex than natural sciences - but their data is more complex. "Using empirical and experimental data" is not a good definition of what science should be. We can argue that "hard sciences" have more objective and accurate data than "soft sciences". But that does not mean that there is no accuracy and no objectivity in "soft sciences". Answering a question scientifically means trying as hard as you can to be accurate and objective - in natural sciences, that means using empirical and experimental data. In social and cultural sciences, that mostly means requiring good reasons and logical proof for everything you say.

It is even wrong to call natural sciences "more accurate" than social and cultural sciences, because their main difference is not the method, but the questions. Explaining natural phenomena with the methods of social science would be inaccurate, but the reverse is just as true. If your question is: "What effect did the invention of the atom bomb have on American culture in the 1950s", using experiments or huge bulks of quantifiable data will not lead to an answer. Instead, you would have to read and analyse sources like books, magazines, movies, comics etc. As "cultural impact" is difficult to quantify, historians and sociologists would need to carefully construct theories based on those sources, and argue with each other about how important certain aspects are. While we will never get a perfect one-page answer to a question like that, our theories on social and cultural questions are constantly tested, evaluated and re-evaluated by many, many "soft" scientists. That way, we get closer and closer to an answer that most or all scientists can agree with - which is as close to "objective truth" as it gets. In many ways, the process of creating viable "soft" theories resembles the way theoretical physics works - a lot of smart people with a certain set of data and already-agreed-on theories argue about which of their unproven concepts seems to be the best answer to a certain problem.

The main reason why we should not underestimate the value of "soft sciences" is that they try to answer, in a reasonable way, questions that most people ask. Unlike with physics and chemistry, almost everyone has theories concerning social and cultural questions. While most people are rather indifferent about string theory, controversial social, cultural and historical questions are discussed in everyday life - be it in the media, in the arts or at a get-together with friends on Friday night. Most living people experienced neither World War II nor the Holocaust, but Godwin's law is still very much in effect.

While everybody has theories concerning the field of "soft sciences", many of those theories suffer from errors in reasoning and rest on common misconceptions. Reducing "soft science" to some kind of second-rate science leads to the idea that, since social and cultural sciences are an "inherently inaccurate" field, any theory in those fields is as good as any other. But that is not true. A theory that has good arguments and that gives good explanations is a better theory. A theory that has contradictions and that ignores evidence to the contrary is a bad theory.

Ultimately, the purpose of this blog is to discuss "soft science" theories, and sometimes to reveal some weaknesses of certain common theories. If answering questions in philosophy, history, sociology and culturology means doing "soft science", we can at least do our best to do good "soft science".
